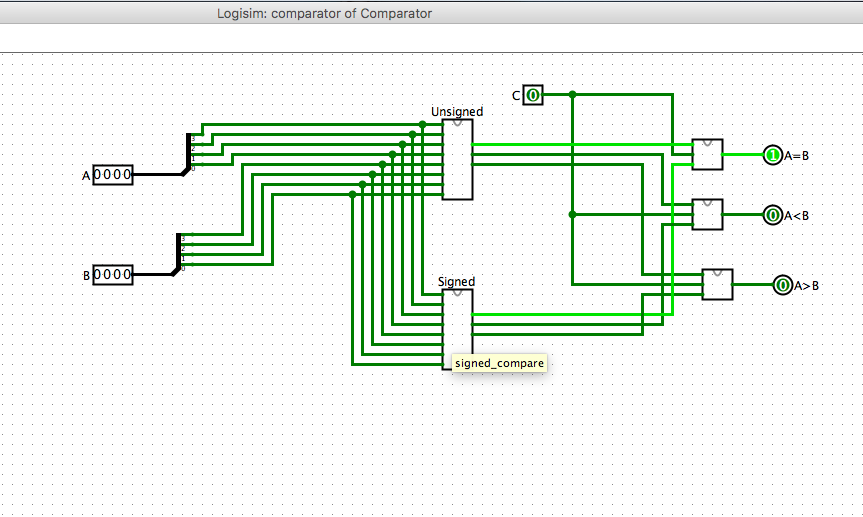

Sign And Magnitude Logisim

In computing, signed number representations are required to encode negative numbers in binary number systems.

In mathematics, negative numbers in any base are represented by prefixing them with a minus sign ('−'). However, in computer hardware, numbers are represented only as sequences of bits, without extra symbols. The four best-known methods of extending the binary numeral system to represent signed numbers are: sign-and-magnitude, ones' complement, two's complement, and offset binary. Some of the alternative methods use implicit instead of explicit signs, such as negative binary, using the base −2. Corresponding methods can be devised for other bases, whether positive, negative, fractional, or other elaborations on such themes.

There is no definitive criterion by which any of the representations is universally superior. For integers, the representation used in most current computing devices is two's complement, although the Unisys ClearPath Dorado series mainframes use ones' complement.

History

The early days of digital computing were marked by a lot of competing ideas about both hardware technology and mathematics technology (numbering systems). One of the great debates was the format of negative numbers, with some of the era's most expert people having very strong and different opinions.[citation needed] One camp supported two's complement, the system that is dominant today. Another camp supported ones' complement, where any positive value is made into its negative equivalent by inverting all of the bits in a word. A third group supported 'sign & magnitude' (sign-magnitude), where a value is changed from positive to negative simply by toggling the word's sign (high-order) bit.

There were arguments for and against each of the systems. Sign & magnitude allowed for easier tracing of memory dumps (a common process in the 1960s) as small numeric values use fewer 1 bits. Internally, these systems did ones' complement math so numbers would have to be converted to ones' complement values when they were transmitted from a register to the math unit and then converted back to sign-magnitude when the result was transmitted back to the register. The electronics required more gates than the other systems – a key concern when the cost and packaging of discrete transistors were critical. IBM was one of the early supporters of sign-magnitude, with their 704, 709 and 709x series computers being perhaps the best-known systems to use it.

Ones' complement allowed for somewhat simpler hardware designs as there was no need to convert values when passed to and from the math unit. But it also shared an undesirable characteristic with sign-magnitude – the ability to represent negative zero (−0). Negative zero behaves exactly like positive zero; when used as an operand in any calculation, the result will be the same whether an operand is positive or negative zero. The disadvantage, however, is that the existence of two forms of the same value necessitates two rather than a single comparison when checking for equality with zero. Ones' complement subtraction can also result in an end-around borrow (described below). It can be argued that this makes the addition/subtraction logic more complicated or that it makes it simpler as a subtraction requires simply inverting the bits of the second operand as it is passed to the adder. The PDP-1, CDC 160 series, CDC 3000 series, CDC 6000 series, UNIVAC 1100 series, and the LINC computer use ones' complement representation.

Two's complement is the easiest to implement in hardware, which may be the ultimate reason for its widespread popularity.[1] Processors on the early mainframes often consisted of thousands of transistors, so eliminating a significant number of transistors was a significant cost saving. Mainframes such as the IBM System/360, the GE-600 series,[2] and the PDP-6 and PDP-10 use two's complement, as did minicomputers such as the PDP-5 and PDP-8 and the PDP-11 and VAX. The architects of the early integrated circuit-based CPUs (Intel 8080, etc.) chose to use two's complement math. As IC technology advanced, virtually all designs adopted two's complement. x86,[3] m68k, Power ISA,[4] MIPS, SPARC, ARM, Itanium, PA-RISC, and DEC Alpha processors are all two's complement.

Signed magnitude representation

This representation is also called 'sign–magnitude' or 'sign and magnitude' representation. In this approach, a number's sign is represented with a sign bit: setting that bit (often the most significant bit) to 0 for a positive number or positive zero, and setting it to 1 for a negative number or negative zero. The remaining bits in the number indicate the magnitude (or absolute value). For example, in an eight-bit byte, only seven bits represent the magnitude, which can range from 0000000 (0) to 1111111 (127). Thus numbers ranging from $-127_{10}$ to $+127_{10}$ can be represented once the sign bit (the eighth bit) is added. For example, $-43_{10}$ encoded in an eight-bit byte is 10101011 while $43_{10}$ is 00101011. Signed magnitude representation has several consequences that make it more intricate to implement:[5]

- There are two ways to represent zero, 00000000 (0) and 10000000 (−0).

- Addition and subtraction require different behaviour depending on the sign bit, whereas ones' complement can ignore the sign bit and just do an end-around carry, and two's complement can ignore the sign bit and depend on the overflow behavior.

- Comparison also requires inspecting the sign bit, whereas in two's complement, one can simply subtract the two numbers and check whether the outcome is positive or negative.

- The minimum negative number is −127 instead of −128 in the case of two's complement.

This approach is directly comparable to the common way of showing a sign (placing a '+' or '−' next to the number's magnitude). Some early binary computers (e.g., IBM 7090) use this representation, perhaps because of its natural relation to common usage. Signed magnitude is the most common way of representing the significand in floating-point values.
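The encoding described in this section can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the helper names `sm_encode` and `sm_decode` are hypothetical, and the sign bit is assumed to be the most significant bit of an 8-bit word.

```python
def sm_encode(value, bits=8):
    """Return the sign-magnitude bit pattern of value as an unsigned int."""
    limit = (1 << (bits - 1)) - 1          # largest magnitude: 127 for 8 bits
    if abs(value) > limit:
        raise ValueError("magnitude does not fit in the available bits")
    sign = 1 if value < 0 else 0           # sign bit: 1 means negative
    return (sign << (bits - 1)) | abs(value)


def sm_decode(pattern, bits=8):
    """Interpret an unsigned bit pattern as a sign-magnitude number."""
    magnitude = pattern & ((1 << (bits - 1)) - 1)
    return -magnitude if pattern >> (bits - 1) else magnitude


print(format(sm_encode(-43), "08b"))  # 10101011
print(format(sm_encode(43), "08b"))   # 00101011
print(sm_decode(0b10000000))          # 0 (the "negative zero" pattern)
```

Both 00000000 and 10000000 decode to zero, which is the double-zero consequence listed above.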

Ones' complement

| Binary value | Ones' complement interpretation | Unsigned interpretation |

|---|---|---|

| 00000000 | +0 | 0 |

| 00000001 | 1 | 1 |

| ⋮ | ⋮ | ⋮ |

| 01111101 | 125 | 125 |

| 01111110 | 126 | 126 |

| 01111111 | 127 | 127 |

| 10000000 | −127 | 128 |

| 10000001 | −126 | 129 |

| 10000010 | −125 | 130 |

| ⋮ | ⋮ | ⋮ |

| 11111101 | −2 | 253 |

| 11111110 | −1 | 254 |

| 11111111 | −0 | 255 |

Alternatively, a system known as ones' complement[6] can be used to represent negative numbers. The ones' complement form of a negative binary number is the bitwise NOT applied to it, i.e. the 'complement' of its positive counterpart. Like sign-and-magnitude representation, ones' complement has two representations of 0: 00000000 (+0) and 11111111 (−0).[7]

As an example, the ones' complement form of 00101011 ($43_{10}$) becomes 11010100 ($-43_{10}$). The range of signed numbers using ones' complement is $-(2^{N-1} - 1)$ to $(2^{N-1} - 1)$, plus ±0. For a conventional eight-bit byte the range is $-127_{10}$ to $+127_{10}$, with zero being either 00000000 (+0) or 11111111 (−0).

To add two numbers represented in this system, one does a conventional binary addition, but it is then necessary to do an end-around carry: that is, add any resulting carry back into the resulting sum.[8] To see why this is necessary, consider the following example showing the case of the addition of −1 (11111110) to +2 (00000010):

In the previous example, the first binary addition gives 00000000, which is incorrect. The correct result (00000001) only appears when the carry is added back in.
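The end-around carry can be sketched in Python as follows. The function name `ones_complement_add` is a hypothetical helper, and operands are assumed to be 8-bit patterns held in ordinary integers.

```python
def ones_complement_add(a, b, bits=8):
    """Add two ones' complement bit patterns with an end-around carry."""
    mask = (1 << bits) - 1
    total = a + b                 # conventional binary addition
    carry = total >> bits         # carry out of the most significant bit
    return ((total & mask) + carry) & mask   # add the carry back in


minus_one = 0b11111110            # -1 in ones' complement
plus_two = 0b00000010             # +2

# Without the end-around carry the sum is wrong:
print(format((minus_one + plus_two) & 0xFF, "08b"))               # 00000000
# With the carry added back in, the result is +1:
print(format(ones_complement_add(minus_one, plus_two), "08b"))    # 00000001
```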

A remark on terminology: The system is referred to as 'ones' complement' because the negation of a positive value x (represented as the bitwise NOT of x) can also be formed by subtracting x from the ones' complement representation of zero that is a long sequence of ones (−0). Two's complement arithmetic, on the other hand, forms the negation of x by subtracting x from a single large power of two that is congruent to +0.[9] Therefore, ones' complement and two's complement representations of the same negative value will differ by one.

Note that the ones' complement representation of a negative number can be obtained from the sign-magnitude representation merely by bitwise complementing the magnitude.

Two's complement

| Binary value | Two's complement interpretation | Unsigned interpretation |

|---|---|---|

| 00000000 | 0 | 0 |

| 00000001 | 1 | 1 |

| ⋮ | ⋮ | ⋮ |

| 01111110 | 126 | 126 |

| 01111111 | 127 | 127 |

| 10000000 | −128 | 128 |

| 10000001 | −127 | 129 |

| 10000010 | −126 | 130 |

| ⋮ | ⋮ | ⋮ |

| 11111110 | −2 | 254 |

| 11111111 | −1 | 255 |

The problems of multiple representations of 0 and the need for the end-around carry are circumvented by a system called two's complement. In two's complement, negative numbers are represented by the bit pattern which is one greater (in an unsigned sense) than the ones' complement of the positive value.

In two's complement, there is only one zero, represented as 00000000. Negating a number (whether negative or positive) is done by inverting all the bits and then adding one to that result.[10] This reflects the ring structure on all integers modulo $2^N$: $\mathbb{Z}/2^N\mathbb{Z}$. Addition of a pair of two's-complement integers is the same as addition of a pair of unsigned numbers (except for detection of overflow, if that is done); the same is true for subtraction and even for the N least significant bits of a product. For instance, a two's-complement addition of 127 and −128 gives the same binary bit pattern as an unsigned addition of 127 and 128, as can be seen from the 8-bit two's complement table.
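The 127 plus −128 case can be checked with a short Python sketch. The helpers `add8` and `to_signed` are hypothetical names; the only assumption is that an 8-bit register is modelled by masking with `0xFF`.

```python
def add8(a, b):
    """Unsigned 8-bit addition: the carry out of bit 7 is discarded."""
    return (a + b) & 0xFF


def to_signed(pattern, bits=8):
    """Read an unsigned bit pattern as a two's complement value."""
    return pattern - (1 << bits) if pattern >> (bits - 1) else pattern


a = 127 & 0xFF     # 01111111
b = -128 & 0xFF    # 10000000, the same pattern as unsigned 128
s = add8(a, b)
print(format(s, "08b"), to_signed(s))   # 11111111 -1
```

The adder never looks at the sign; only the interpretation of the resulting pattern differs.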

An easier method to get the negation of a number in two's complement is as follows:

| | Example 1 | Example 2 |

|---|---|---|

| 1. Starting from the right, find the first '1' | 00101001 | 00101100 |

| 2. Invert all of the bits to the left of that '1' | 11010111 | 11010100 |

Method two:

- Invert all the bits through the number

- Add one

Example: for +2, which is 00000010 in binary (the ~ character is the C bitwise NOT operator, so ~X means 'invert all the bits in X'):

- ~00000010 → 11111101

- 11111101 + 1 → 11111110 (−2 in two's complement)
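Both negation methods can be sketched in Python and compared against each other. The function names are hypothetical, and an 8-bit word is assumed.

```python
def negate_invert_add(x, bits=8):
    """Method two: invert all the bits, then add one."""
    mask = (1 << bits) - 1
    return ((~x & mask) + 1) & mask


def negate_keep_low_one(x, bits=8):
    """Method one: keep the rightmost '1' and everything to its right,
    and invert every bit to the left of it."""
    mask = (1 << bits) - 1
    if x == 0:
        return 0                              # zero has no '1' to find
    lowest = x & -x                           # isolates the rightmost '1'
    left_of_lowest = mask & ~((lowest << 1) - 1)
    return x ^ left_of_lowest                 # flip only the bits to the left


print(format(negate_invert_add(0b00000010), "08b"))    # 11111110
print(format(negate_keep_low_one(0b00101001), "08b"))  # 11010111
print(format(negate_keep_low_one(0b00101100), "08b"))  # 11010100
```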

Offset binary

| Binary value | Excess-128 interpretation | Unsigned interpretation |

|---|---|---|

| 00000000 | −128 | 0 |

| 00000001 | −127 | 1 |

| ⋮ | ⋮ | ⋮ |

| 01111111 | −1 | 127 |

| 10000000 | 0 | 128 |

| 10000001 | 1 | 129 |

| ⋮ | ⋮ | ⋮ |

| 11111111 | +127 | 255 |

Offset binary, also called excess-K or biased representation, uses a pre-specified number K as a biasing value. A value is represented by the unsigned number which is K greater than the intended value. Thus 0 is represented by K, and −K is represented by the all-zeros bit pattern. This can be seen as a slight modification and generalization of the aforementioned two's complement, which is virtually the excess-$2^{N-1}$ representation with negated most significant bit.
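A minimal Python sketch of excess-K encoding, assuming K = 128 and an 8-bit word; `excess_encode` and `excess_decode` are hypothetical helper names. The last line illustrates the relation to two's complement: flipping the most significant bit of the two's complement pattern yields the excess-128 pattern.

```python
def excess_encode(value, k=128, bits=8):
    """Return the unsigned pattern that is k greater than value."""
    pattern = value + k
    if not 0 <= pattern < (1 << bits):
        raise ValueError("value out of range for this bias and width")
    return pattern


def excess_decode(pattern, k=128):
    """Recover the intended value by subtracting the bias."""
    return pattern - k


print(format(excess_encode(-128), "08b"))  # 00000000
print(format(excess_encode(0), "08b"))     # 10000000
print(format(excess_encode(127), "08b"))   # 11111111

# Two's complement pattern with the top bit flipped equals excess-128:
print(all(((v & 0xFF) ^ 0x80) == excess_encode(v) for v in range(-128, 128)))  # True
```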

Biased representations are now primarily used for the exponent of floating-point numbers. The IEEE 754 floating-point standard defines the exponent field of a single-precision (32-bit) number as an 8-bit excess-127 field. The double-precision (64-bit) exponent field is an 11-bit excess-1023 field; see exponent bias. A biased representation was also used for binary-coded decimal numbers, as excess-3.

Base −2

In conventional binary number systems, the base, or radix, is 2; thus the rightmost bit represents $2^0$, the next bit represents $2^1$, the next bit $2^2$, and so on. However, a binary number system with base −2 is also possible. The rightmost bit represents $(-2)^0 = +1$, the next bit represents $(-2)^1 = -2$, the next bit $(-2)^2 = +4$ and so on, with alternating sign. The numbers that can be represented with four bits are shown in the comparison table below.

The range of numbers that can be represented is asymmetric. If the word has an even number of bits, the magnitude of the largest negative number that can be represented is twice as large as the largest positive number that can be represented, and vice versa if the word has an odd number of bits.
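Conversion to and from base −2 can be sketched in Python with repeated division. The function names are hypothetical; the conversion relies on Python's `divmod`, which returns a remainder of 0 or −1 for a divisor of −2, so a −1 remainder is adjusted to the digit 1.

```python
def to_negabinary(n):
    """Return the base -2 digit string of an integer."""
    if n == 0:
        return "0"
    digits = []
    while n != 0:
        n, r = divmod(n, -2)
        if r < 0:                 # remainder -1: borrow to get digit 1
            n, r = n + 1, r + 2
        digits.append(str(r))
    return "".join(reversed(digits))


def from_negabinary(digits):
    """Evaluate a base -2 digit string."""
    return sum(int(d) * (-2) ** i for i, d in enumerate(reversed(digits)))


print(to_negabinary(5))     # 101
print(to_negabinary(-10))   # 1010

# Integers that fit in four base -2 digits:
print([n for n in range(-16, 17) if len(to_negabinary(n)) <= 4])
```

The final line lists every integer from −10 to +5, which shows the asymmetric range for an even number of bits.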

Comparison table

The following table shows the positive and negative integers that can be represented using four bits.

| Decimal | Unsigned | Sign and magnitude | Ones' complement | Two's complement | Excess-8 (biased) | Base −2 |

|---|---|---|---|---|---|---|

| +16 | N/A | N/A | N/A | N/A | N/A | N/A |

| +15 | 1111 | N/A | N/A | N/A | N/A | N/A |

| +14 | 1110 | N/A | N/A | N/A | N/A | N/A |

| +13 | 1101 | N/A | N/A | N/A | N/A | N/A |

| +12 | 1100 | N/A | N/A | N/A | N/A | N/A |

| +11 | 1011 | N/A | N/A | N/A | N/A | N/A |

| +10 | 1010 | N/A | N/A | N/A | N/A | N/A |

| +9 | 1001 | N/A | N/A | N/A | N/A | N/A |

| +8 | 1000 | N/A | N/A | N/A | N/A | N/A |

| +7 | 0111 | 0111 | 0111 | 0111 | 1111 | N/A |

| +6 | 0110 | 0110 | 0110 | 0110 | 1110 | N/A |

| +5 | 0101 | 0101 | 0101 | 0101 | 1101 | 0101 |

| +4 | 0100 | 0100 | 0100 | 0100 | 1100 | 0100 |

| +3 | 0011 | 0011 | 0011 | 0011 | 1011 | 0111 |

| +2 | 0010 | 0010 | 0010 | 0010 | 1010 | 0110 |

| +1 | 0001 | 0001 | 0001 | 0001 | 1001 | 0001 |

| +0 | 0000 | 0000 | 0000 | 0000 | 1000 | 0000 |

| −0 | N/A | 1000 | 1111 | N/A | N/A | N/A |

| −1 | N/A | 1001 | 1110 | 1111 | 0111 | 0011 |

| −2 | N/A | 1010 | 1101 | 1110 | 0110 | 0010 |

| −3 | N/A | 1011 | 1100 | 1101 | 0101 | 1101 |

| −4 | N/A | 1100 | 1011 | 1100 | 0100 | 1100 |

| −5 | N/A | 1101 | 1010 | 1011 | 0011 | 1111 |

| −6 | N/A | 1110 | 1001 | 1010 | 0010 | 1110 |

| −7 | N/A | 1111 | 1000 | 1001 | 0001 | 1001 |

| −8 | N/A | N/A | N/A | 1000 | 0000 | 1000 |

| −9 | N/A | N/A | N/A | N/A | N/A | 1011 |

| −10 | N/A | N/A | N/A | N/A | N/A | 1010 |

| −11 | N/A | N/A | N/A | N/A | N/A | N/A |

Same table, as viewed from 'given these binary bits, what is the number as interpreted by the representation system':

| Binary | Unsigned | Sign and magnitude | Ones' complement | Two's complement | Excess-8 | Base −2 |

|---|---|---|---|---|---|---|

| 0000 | 0 | 0 | 0 | 0 | −8 | 0 |

| 0001 | 1 | 1 | 1 | 1 | −7 | 1 |

| 0010 | 2 | 2 | 2 | 2 | −6 | −2 |

| 0011 | 3 | 3 | 3 | 3 | −5 | −1 |

| 0100 | 4 | 4 | 4 | 4 | −4 | 4 |

| 0101 | 5 | 5 | 5 | 5 | −3 | 5 |

| 0110 | 6 | 6 | 6 | 6 | −2 | 2 |

| 0111 | 7 | 7 | 7 | 7 | −1 | 3 |

| 1000 | 8 | −0 | −7 | −8 | 0 | −8 |

| 1001 | 9 | −1 | −6 | −7 | 1 | −7 |

| 1010 | 10 | −2 | −5 | −6 | 2 | −10 |

| 1011 | 11 | −3 | −4 | −5 | 3 | −9 |

| 1100 | 12 | −4 | −3 | −4 | 4 | −4 |

| 1101 | 13 | −5 | −2 | −3 | 5 | −3 |

| 1110 | 14 | −6 | −1 | −2 | 6 | −6 |

| 1111 | 15 | −7 | −0 | −1 | 7 | −5 |

Other systems

Google's Protocol Buffers 'zig-zag encoding' is a system similar to sign-and-magnitude, but uses the least significant bit to represent the sign and has a single representation of zero. This allows a variable-length quantity encoding intended for nonnegative (unsigned) integers to be used efficiently for signed integers.[11]
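A sketch of zig-zag encoding in Python, assuming 64-bit signed inputs. The function names are hypothetical; the expressions follow the commonly documented shift-and-XOR formulation, and Python's `>>` on negative integers is an arithmetic shift, which this relies on.

```python
def zigzag_encode(n, bits=64):
    """Map a signed integer to an unsigned one: 0, -1, 1, -2, ... -> 0, 1, 2, 3, ..."""
    return ((n << 1) ^ (n >> (bits - 1))) & ((1 << bits) - 1)


def zigzag_decode(z):
    """Inverse mapping: the least significant bit carries the sign."""
    return (z >> 1) ^ -(z & 1)


print([zigzag_encode(n) for n in (0, -1, 1, -2, 2)])   # [0, 1, 2, 3, 4]
print([zigzag_decode(z) for z in range(5)])            # [0, -1, 1, -2, 2]
```

Small magnitudes of either sign map to small unsigned values, which is what lets a variable-length encoding stay short.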

Another approach is to give each digit a sign, yielding the signed-digit representation. For instance, in 1726, John Colson advocated reducing expressions to 'small numbers', numerals 1, 2, 3, 4, and 5. In 1840, Augustin Cauchy also expressed preference for such modified decimal numbers to reduce errors in computation.

References

- Choo, Hunsoo; Muhammad, K.; Roy, K. (February 2003). 'Two's complement computation sharing multiplier and its applications to high performance DFE'. IEEE Transactions on Signal Processing. 51 (2): 458–469. doi:10.1109/TSP.2002.806984.

- GE-625 / 635 Programming Reference Manual. General Electric. January 1966. Retrieved August 15, 2013.

- Intel 64 and IA-32 Architectures Software Developer's Manual (PDF). Intel. Section 4.2.1. Retrieved August 6, 2013.

- Power ISA Version 2.07. Power.org. Section 1.4. Retrieved August 6, 2013.

- Bacon, Jason W. (2010–2011). 'Computer Science 315 Lecture Notes'. Retrieved 21 February 2020.

- US 4484301, 'Array multiplier operating in one's complement format', issued 1981-03-10

- US 6760440, 'One's complement cryptographic combiner', issued 1999-12-11

- Shedletsky, John J. (1977). 'Comment on the Sequential and Indeterminate Behavior of an End-Around-Carry Adder' (PDF). IEEE Transactions on Computers. 26 (3): 271–272. doi:10.1109/TC.1977.1674817.

- Donald Knuth: The Art of Computer Programming, Volume 2: Seminumerical Algorithms, chapter 4.1

- Thomas Finley (April 2000). 'Two's Complement'. Cornell University. Retrieved 15 September 2015.

- Protocol Buffers: Signed Integers

- Ivan Flores, The Logic of Computer Arithmetic, Prentice-Hall (1963)

- Israel Koren, Computer Arithmetic Algorithms, A.K. Peters (2002), ISBN 1-56881-160-8

A binary multiplier is an electronic circuit used in digital electronics, such as a computer, to multiply two binary numbers. It is built using binary adders.

A variety of computer arithmetic techniques can be used to implement a digital multiplier. Most techniques involve computing a set of partial products, and then summing the partial products together. This process is similar to the method taught to primary schoolchildren for conducting long multiplication on base-10 integers, but has been modified here for application to a base-2 (binary) numeral system.

History

Between 1947 and 1949 Arthur Alec Robinson worked for English Electric Ltd, first as a student apprentice and then as a development engineer. During this period he studied for a PhD degree at the University of Manchester, where he worked on the design of the hardware multiplier for the early Mark 1 computer.[3] However, until the late 1970s, most minicomputers did not have a multiply instruction, and so programmers used a 'multiply routine'[1][2] which repeatedly shifts and accumulates partial results, often written using loop unwinding. Mainframe computers had multiply instructions, but they did the same sorts of shifts and adds as a 'multiply routine'.

Early microprocessors also had no multiply instruction. Though the multiply instruction is usually associated with the 16-bit microprocessor generation,[3] at least two 'enhanced' 8-bit micros have a multiply instruction: the Motorola 6809, introduced in 1978,[4][5] and the Intel MCS-51 family, developed in 1980. The later Atmel AVR 8-bit microprocessors present in the ATMega, ATTiny and ATXMega microcontrollers have one as well.

As more transistors per chip became available due to larger-scale integration, it became possible to put enough adders on a single chip to sum all the partial products at once, rather than reuse a single adder to handle each partial product one at a time.

Because some common digital signal processing algorithms spend most of their time multiplying, digital signal processor designers sacrifice a lot of chip area in order to make the multiply as fast as possible; a single-cycle multiply–accumulate unit often used up most of the chip area of early DSPs.

Basics

The method taught in school for multiplying decimal numbers is based on calculating partial products, shifting them to the left and then adding them together. The most difficult part is to obtain the partial products, as that involves multiplying a long number by one digit (from 0 to 9):

Binary numbers

A binary computer performs exactly the same multiplication, but with binary numbers. In binary encoding each long number is multiplied by one digit (either 0 or 1), and that is much easier than in decimal, as the product by 0 or 1 is just 0 or the same number. Therefore, the multiplication of two binary numbers comes down to calculating partial products (which are 0 or the first number), shifting them left, and then adding them together (a binary addition, of course):

This is much simpler than in the decimal system, as there is no table of multiplication to remember: just shifts and adds.
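The shift-and-add procedure can be sketched in Python for unsigned operands. The function name `shift_add_multiply` and the sample operands are chosen purely for illustration.

```python
def shift_add_multiply(a, b):
    """Multiply two non-negative integers using only shifts and adds."""
    product = 0
    shift = 0
    while b:
        if b & 1:                     # partial product is a (bit is 1) ...
            product += a << shift     # ... shifted left by the bit position
        # when the bit is 0 the partial product is 0 and nothing is added
        b >>= 1
        shift += 1
    return product


print(format(shift_add_multiply(0b1011, 0b1110), "b"))   # 10011010 (11 * 14 = 154)
```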

This method is mathematically correct and has the advantage that a small CPU may perform the multiplication by using the shift and add features of its arithmetic logic unit rather than a specialized circuit. The method is slow, however, as it involves many intermediate additions. These additions take a lot of time. Faster multipliers may be engineered in order to do fewer additions; a modern processor can multiply two 64-bit numbers with 6 additions (rather than 64), and can do several steps in parallel.[citation needed]

The second problem is that the basic school method handles the sign with a separate rule ('+ with + yields +', '+ with − yields −', etc.). Modern computers embed the sign of the number in the number itself, usually in the two's complement representation. That forces the multiplication process to be adapted to handle two's complement numbers, and that complicates the process a bit more. Similarly, processors that use ones' complement, sign-and-magnitude, IEEE-754 or other binary representations require specific adjustments to the multiplication process.

Unsigned numbers

For example, suppose we want to multiply two unsigned eight bit integers together: a[7:0] and b[7:0]. We can produce eight partial products by performing eight one-bit multiplications, one for each bit in multiplicand a:

where {8{a[0]}} means repeating a[0] (the 0th bit of a) 8 times (Verilog notation).

To produce our product, we then need to add up all eight of our partial products, as shown here:

In other words, P[15:0] is produced by summing p0, p1 << 1, p2 << 2, and so forth, to produce our final unsigned 16-bit product.
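The partial-product formulation can be mirrored in Python. This is a sketch rather than a hardware description: `partial_products` and `multiply_u8` are hypothetical names, and the Verilog replication `{8{a[i]}}` is modelled as the mask `0xFF` or `0x00`.

```python
def partial_products(a, b):
    """Return [p0, ..., p7], where p[i] = {8{a[i]}} & b[7:0]."""
    return [(0xFF if (a >> i) & 1 else 0x00) & b for i in range(8)]


def multiply_u8(a, b):
    """Sum p0, p1 << 1, ..., p7 << 7 into a 16-bit unsigned product."""
    return sum(p << i for i, p in enumerate(partial_products(a, b))) & 0xFFFF


print(partial_products(0b00000101, 0b00000011))   # [3, 0, 3, 0, 0, 0, 0, 0]
print(multiply_u8(200, 100))                      # 20000
```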

Signed integers

If b had been a signed integer instead of an unsigned integer, then the partial products would need to have been sign-extended up to the width of the product before summing. If a had been a signed integer, then partial product p7 would need to be subtracted from the final sum, rather than added to it.
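The two adjustments described above (sign-extending the partial products and subtracting p7) can be sketched in Python for 8-bit two's complement operands. All names are hypothetical, and the sketch models the arithmetic only, not the optimized bit array discussed next.

```python
def sign_extend(x, from_bits=8, to_bits=16):
    """Copy the sign bit of an 8-bit pattern into the upper bits."""
    if (x >> (from_bits - 1)) & 1:
        x |= ((1 << to_bits) - 1) & ~((1 << from_bits) - 1)
    return x


def to_signed16(pattern):
    """Read a 16-bit pattern as a two's complement value."""
    return pattern - (1 << 16) if pattern >> 15 else pattern


def multiply_s8(a, b):
    """Multiply two 8-bit two's complement patterns into a 16-bit pattern."""
    b_ext = sign_extend(b)            # b is signed: extend each partial product
    acc = 0
    for i in range(7):                # bits 0..6 of a carry positive weight
        if (a >> i) & 1:
            acc += b_ext << i
    if (a >> 7) & 1:                  # bit 7 of a has weight -128: subtract p7
        acc -= b_ext << 7
    return acc & 0xFFFF


print(to_signed16(multiply_s8(-3 & 0xFF, 5)))           # -15
print(to_signed16(multiply_s8(-128 & 0xFF, -128 & 0xFF)))  # 16384
```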

The above array multiplier can be modified to support two's complement notation signed numbers by inverting several of the product terms and inserting a one to the left of the first partial product term:

Where ~p represents the complement (opposite value) of p.

There are a lot of simplifications in the bit array above that are not shown and are not obvious. The sequences of one complemented bit followed by noncomplemented bits are implementing a two's complement trick to avoid sign extension. The sequence of p7 (noncomplemented bit followed by all complemented bits) is because we're subtracting this term so they were all negated to start out with (and a 1 was added in the least significant position). For both types of sequences, the last bit is flipped and an implicit -1 should be added directly below the MSB. When the +1 from the two's complement negation for p7 in bit position 0 (LSB) and all the -1's in bit columns 7 through 14 (where each of the MSBs are located) are added together, they can be simplified to the single 1 that 'magically' is floating out to the left. For an explanation and proof of why flipping the MSB saves us the sign extension, see a computer arithmetic book.[6]

Floating point numbers

A binary floating-point number contains a sign bit, significant bits (known as the significand) and exponent bits (for simplicity, we don't consider the base and combination field). The sign bits of each operand are XOR'd to get the sign of the answer. Then, the two exponents are added to get the exponent of the result. Finally, multiplication of each operand's significand will return the significand of the result. However, if the result of the binary multiplication has more bits than are available for a specific precision (e.g. 32, 64, 128), rounding is required and the exponent is adjusted accordingly.
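The steps above can be sketched in Python for IEEE 754 single precision. This is a simplified illustration under several assumptions: both inputs and the result are normal numbers (no zeros, subnormals, infinities or NaNs), rounding is to nearest with ties to even, and the names `fields` and `fmul32` are hypothetical.

```python
import struct


def fields(x):
    """Split a float (stored as binary32) into sign, biased exponent, fraction."""
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    return bits >> 31, (bits >> 23) & 0xFF, bits & 0x7FFFFF


def fmul32(x, y):
    s1, e1, f1 = fields(x)
    s2, e2, f2 = fields(y)
    sign = s1 ^ s2                                # XOR the sign bits
    exp = e1 + e2 - 127                           # add exponents, remove one bias
    sig = ((1 << 23) | f1) * ((1 << 23) | f2)     # 24-bit x 24-bit significands
    shift = 23
    if sig >> 47:                                 # product in [2, 4): renormalize
        shift = 24
        exp += 1
    kept = sig >> shift
    dropped = sig & ((1 << shift) - 1)
    half = 1 << (shift - 1)
    if dropped > half or (dropped == half and kept & 1):   # round to nearest even
        kept += 1
        if kept >> 24:                            # rounding overflowed the significand
            kept >>= 1
            exp += 1
    bits = (sign << 31) | (exp << 23) | (kept & 0x7FFFFF)
    return struct.unpack("<f", struct.pack("<I", bits))[0]


print(fmul32(1.5, 2.5))    # 3.75
print(fmul32(-3.0, 0.5))   # -1.5
```

A production implementation would also have to handle exponent overflow and underflow and the special values excluded here.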

Implementations

Older multiplier architectures employed a shifter and accumulator to sum each partial product, often one partial product per cycle, trading off speed for die area. Modern multiplier architectures use the (Modified) Baugh–Wooley algorithm,[7][8][9][10] Wallace trees, or Dadda multipliers to add the partial products together in a single cycle. The performance of the Wallace tree implementation is sometimes improved by modified Booth encoding one of the two multiplicands, which reduces the number of partial products that must be summed.

See also

- BKM algorithm for complex logarithms and exponentials

- Kochanski multiplication for modular multiplication

References

- 'The Evolution of Forth' by Elizabeth D. Rather et al.

- 'Interfacing a hardware multiplier to a general-purpose microprocessor'

- M. Rafiquzzaman (2005). Fundamentals of Digital Logic and Microcomputer Design. John Wiley & Sons. p. 251. ISBN 978-0-47173349-2.

- Krishna Kant (2007). Microprocessors and Microcontrollers: Architecture, Programming and System Design 8085, 8086, 8051, 8096. PHI Learning Pvt. Ltd. p. 57. ISBN 9788120331914.

- Krishna Kant (2010). Microprocessor-Based Agri Instrumentation. PHI Learning Pvt. Ltd. p. 139. ISBN 9788120340862.

- Parhami, Behrooz, Computer Arithmetic: Algorithms and Hardware Designs, Oxford University Press, New York, 2000 (ISBN 0-19-512583-5, 490 + xx pp.)

- Baugh, Charles Richmond; Wooley, Bruce A. (December 1973). 'A Two's Complement Parallel Array Multiplication Algorithm'. IEEE Transactions on Computers. C-22 (12): 1045–1047. doi:10.1109/T-C.1973.223648.

- Hatamian, Mehdi; Cash, Glenn (1986). 'A 70-MHz 8-bit×8-bit parallel pipelined multiplier in 2.5-µm CMOS'. IEEE Journal of Solid-State Circuits. 21 (4): 505–513. doi:10.1109/jssc.1986.1052564.

- Gebali, Fayez (2003). 'Baugh–Wooley Multiplier' (PDF). University of Victoria, CENG 465 Lab 2. Archived (PDF) from the original on 2018-04-14. Retrieved 2018-04-14.

- Reynders, Nele; Dehaene, Wim (2015). Ultra-Low-Voltage Design of Energy-Efficient Digital Circuits. Analog Circuits And Signal Processing (ACSP) (1 ed.). Cham, Switzerland: Springer International Publishing AG Switzerland. doi:10.1007/978-3-319-16136-5. ISBN 978-3-319-16135-8. ISSN 1872-082X. LCCN 2015935431.

- Hennessy, John L.; Patterson, David A. (1990). 'Section A.2, section A.9'. Computer Architecture: A Quantitative Approach. Morgan Kaufmann Publishers, Inc. pp. A–3.A–6, A–39.A–49. ISBN 978-0-12383872-8.

External links

- Multiplier Designs targeted at FPGAs